1/24/2025: CrossSim V3.1 has been released, adding new interfaces to PyTorch and TensorFlow-Keras neural network models. Access it on GitHub.

10/6/2023: CrossSim V3.0 has been released!

About CrossSim

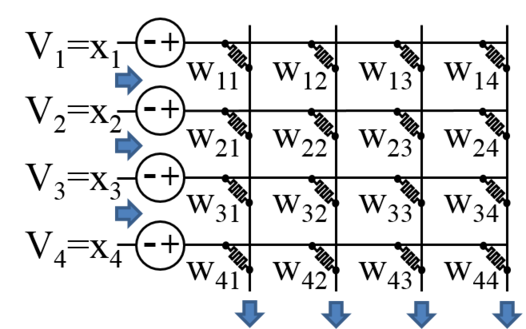

CrossSim is a GPU-accelerated, Python-based crossbar simulator designed to model analog in-memory computing for neural network and linear algebra applications. It is an accuracy simulator and co-design tool developed to study how analog hardware effects in resistive crossbars affect the quality of an algorithm's solution. CrossSim provides a dedicated interface for modeling analog accelerators for neural network inference and training, as well as an API for building other algorithms from resistive memory arrays as building blocks.
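As a reference point for the hardware being modeled, an ideal resistive crossbar computes a matrix-vector product in a single step: input voltages $V_i$ drive the rows, and by Ohm's law and Kirchhoff's current law each column current is a conductance-weighted sum. One common way to store signed weights (assumed here for illustration; it is not necessarily CrossSim's default scheme) is a differential pair of devices with conductances in $[G_{\min}, G_{\max}]$, for weights bounded by $|W_{ij}| \le W_{\max}$:

$$
I_j = \sum_i V_i \, G_{ij}
$$

$$
G^{\pm}_{ij} = G_{\min} + (G_{\max} - G_{\min})\,\frac{\max(\pm W_{ij},\, 0)}{W_{\max}}
\quad\Longrightarrow\quad
I^{+}_j - I^{-}_j = \frac{G_{\max} - G_{\min}}{W_{\max}} \sum_i V_i \, W_{ij}
$$

The $G_{\min}$ terms cancel in the difference, so the subtracted column currents are proportional to the desired product. This holds only for an ideal array with no device or circuit errors.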

CrossSim can model device and circuit non-idealities such as arbitrary programming errors, conductance drift, cycle-to-cycle read noise, and precision loss in the analog-to-digital converter (ADC). It also uses a fast internal circuit simulator to model the effect of parasitic metal resistances on accuracy. For neural network inference, it can simulate accelerators that are highly parameterizable at the system-architecture level, and it can be used to explore how design choices such as weight bit slicing, the negative number representation scheme, ADC ranges, and array size affect sensitivity to these analog errors. CrossSim can be accelerated on CUDA GPUs, and inference simulations have been run on large-scale deep neural networks such as ResNet50 on ImageNet.
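To make a few of these non-idealities concrete, here is a minimal pure-Python sketch. It is not CrossSim's API and is far simpler than its models: it maps signed weights onto a differential conductance pair, adds a one-time Gaussian programming error and per-read Gaussian read noise, and quantizes each column output with a uniform ADC. All function names, the normalized conductance range, and the noise magnitudes are hypothetical, and drift and parasitic resistance are not modeled.

```python
import random

# Hypothetical normalized conductance range (arbitrary units); real devices differ.
G_MIN, G_MAX = 0.0, 1.0
SPAN = G_MAX - G_MIN


def map_differential(W):
    """Map signed weights in [-1, 1] to a (G_plus, G_minus) conductance pair."""
    G_plus = [[G_MIN + SPAN * max(w, 0.0) for w in row] for row in W]
    G_minus = [[G_MIN + SPAN * max(-w, 0.0) for w in row] for row in W]
    return G_plus, G_minus


def program(G, sigma, rng):
    """One-time programming error: Gaussian offset (std = sigma * SPAN), clipped to the device range."""
    return [[min(max(g + rng.gauss(0.0, sigma * SPAN), G_MIN), G_MAX) for g in row]
            for row in G]


def adc(x, x_min, x_max, bits):
    """Uniform ADC: clip x to [x_min, x_max], then round to one of 2**bits levels."""
    step = (x_max - x_min) / (2 ** bits - 1)
    x = min(max(x, x_min), x_max)
    return x_min + round((x - x_min) / step) * step


def analog_mvm(G_plus, G_minus, v, read_sigma, adc_range, bits, rng):
    """Estimate y_j = sum_i v_i * W_ij from conductances, with read noise and ADC quantization."""
    n_rows, n_cols = len(G_plus), len(G_plus[0])
    y = []
    for j in range(n_cols):
        current = 0.0  # differential column current I+_j - I-_j
        for i in range(n_rows):
            # Cycle-to-cycle read noise, redrawn on every read (applied to the
            # pair's difference as a simplification).
            g_diff = G_plus[i][j] - G_minus[i][j] + rng.gauss(0.0, read_sigma * SPAN)
            current += v[i] * g_diff
        # Rescale the current back to weight units before digitizing.
        y.append(adc(current / SPAN, adc_range[0], adc_range[1], bits))
    return y


# Usage: a 2x2 weight matrix (rows = inputs, columns = outputs).
rng = random.Random(0)
W = [[0.5, -0.25],
     [-1.0, 0.75]]
v = [1.0, 0.5]
G_plus, G_minus = map_differential(W)

# Ideal reference: no programming error, no read noise, 16-bit ADC.
ideal = analog_mvm(G_plus, G_minus, v, read_sigma=0.0,
                   adc_range=(-2.0, 2.0), bits=16, rng=rng)

# Non-ideal case: programming error and read noise with std of 2% and 1% of
# the conductance range, plus a 4-bit ADC.
Gp_prog = program(G_plus, sigma=0.02, rng=rng)
Gm_prog = program(G_minus, sigma=0.02, rng=rng)
noisy = analog_mvm(Gp_prog, Gm_prog, v, read_sigma=0.01,
                   adc_range=(-2.0, 2.0), bits=4, rng=rng)

print("ideal:", ideal)
print("noisy:", noisy)
```

Sweeping `bits`, `adc_range`, or the noise parameters for a fixed seed and comparing `noisy` against `ideal` is a toy version of the design-space sensitivity studies described above.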

For neural network training accelerators, CrossSim can generate lookup tables of device behavior from experimental data. These lookup tables are then used to realistically simulate the accuracy impact of arbitrarily complex conductance update characteristics, including write nonlinearity, write asymmetry, write stochasticity, and device-to-device variability.
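The lookup-table idea can be illustrated with a minimal pure-Python sketch. It is not CrossSim's implementation or API: it bins (G_before, G_after) pulse pairs by the starting conductance, stores every observed change per bin, and updates a simulated device by sampling from the bin that matches its current state. The `toy_pulse` device, its parameters, and all function names are hypothetical, and the "measurements" are synthetic traces generated by that toy model, not experimental data.

```python
import random

G_MIN, G_MAX = 0.0, 1.0  # hypothetical normalized conductance range


def bin_index(g, n_bins):
    """Index of the conductance-state bin containing g."""
    width = (G_MAX - G_MIN) / n_bins
    return min(max(int((g - G_MIN) / width), 0), n_bins - 1)


def build_lut(pairs, n_bins):
    """Bin measured (G_before, G_after) pulse pairs by G_before; keep every observed dG."""
    lut = [[] for _ in range(n_bins)]
    for g_before, g_after in pairs:
        lut[bin_index(g_before, n_bins)].append(g_after - g_before)
    return lut


def lut_update(g, lut, rng):
    """Apply one pulse by sampling dG from the bin for the current state g.

    Falls back to the nearest non-empty bin if state g was never observed.
    """
    n_bins = len(lut)
    k = bin_index(g, n_bins)
    for offset in range(n_bins):
        for idx in (k - offset, k + offset):
            if 0 <= idx < n_bins and lut[idx]:
                dg = rng.choice(lut[idx])
                return min(max(g + dg, G_MIN), G_MAX)
    return g  # empty table: leave the state unchanged


def toy_pulse(g, up, rng):
    """Toy device used ONLY to generate synthetic traces: saturating, asymmetric, noisy."""
    dg = 0.05 * (G_MAX - g) if up else -0.08 * (g - G_MIN)
    return min(max(g + dg + rng.gauss(0.0, 0.005), G_MIN), G_MAX)


# Synthetic "measurement": repeated sweeps of 100 up pulses then 100 down pulses.
rng = random.Random(1)
up_pairs, down_pairs = [], []
g = G_MIN
for _ in range(5):
    for _ in range(100):
        g_next = toy_pulse(g, True, rng)
        up_pairs.append((g, g_next))
        g = g_next
    for _ in range(100):
        g_next = toy_pulse(g, False, rng)
        down_pairs.append((g, g_next))
        g = g_next

# Separate up/down tables capture write asymmetry; per-bin dG lists capture
# state-dependent nonlinearity and cycle-to-cycle stochasticity.
up_lut = build_lut(up_pairs, n_bins=20)
down_lut = build_lut(down_pairs, n_bins=20)

# Simulate 10 potentiating pulses on a device starting at G = 0.1.
g_sim = 0.1
for _ in range(10):
    g_sim = lut_update(g_sim, up_lut, rng)
print("G after 10 up pulses:", round(g_sim, 3))
```

Sampling from the stored changes rather than fitting a formula lets the table reproduce whatever update shape the data shows. Device-to-device variability could be layered on top, for example by scaling each simulated device's sampled changes by its own random factor; that extension is omitted here.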

CrossSim does not explicitly model the energy, area, or speed of analog accelerators.

Access CrossSim

CrossSim is developed and maintained on GitHub.

Documentation

More extensive documentation of CrossSim Training and the neural core API will be released in a future update.

Selected Publications Using CrossSim

- M. Spear, J. E. Kim, C. H. Bennett, S. Agarwal, M. J. Marinella, and T. P. Xiao, “The Impact of Analog-to-Digital Converter Architecture and Variability on Analog Neural Network Accuracy,” IEEE Journal on Exploratory Solid-State Computational Devices and Circuits, 2023.

- T. P. Xiao, B. Feinberg, C. H. Bennett, V. Prabhakar, P. Saxena, V. Agrawal, S. Agarwal, and M. J. Marinella, “On the accuracy of analog neural network inference accelerators,” IEEE Circuits and Systems Magazine, vol. 22, no. 4, pp. 26-48, 2022.

- T. P. Xiao, B. Feinberg, C. H. Bennett, V. Agrawal, P. Saxena, V. Prabhakar, K. Ramkumar, H. Medu, V. Raghavan, R. Chettuvetty, S. Agarwal, and M. J. Marinella, “An Accurate, Error-Tolerant, and Energy-Efficient Neural Network Inference Engine Based on SONOS Analog Memory,” IEEE Transactions on Circuits and Systems I: Regular Papers, vol. 69, no. 4, pp. 1480-1493, 2022.

- S. Liu, T. P. Xiao, C. Cui, J. A. C. Incorvia, C. H. Bennett, and M. J. Marinella, “A domain wall-magnetic tunnel junction artificial synapse with notched geometry for accurate and efficient training of deep neural networks,” Applied Physics Letters, vol. 118, no. 5, p. 202405, 2021.

- T. P. Xiao, B. Feinberg, J. N. Rohan, C. H. Bennett, S. Agarwal, and M. J. Marinella, “Analysis and mitigation of parasitic resistance effects for analog in-memory neural network acceleration,” Semiconductor Science and Technology, vol. 36, no. 11, p. 114004, 2021.

- Y. Li, T. P. Xiao, C. H. Bennett, E. Isele, A. Melianas, H. Tao, M. J. Marinella, A. Salleo, E. J. Fuller, and A. A. Talin, “In situ parallel training of analog neural network using electrochemical random-access memory,” Frontiers in Neuroscience, vol. 15, 2021.

- T. P. Xiao, C. H. Bennett, S. Agarwal, D. R. Hughart, H. J. Barnaby, H. Puchner, V. Prabhakar, A. A. Talin, and M. J. Marinella, “Ionizing Radiation Effects in SONOS-Based Neuromorphic Inference Accelerators,” IEEE Transactions on Nuclear Science, vol. 68, no. 5, pp. 762-769, 2021.

- E. J. Fuller, S. T. Keene, A. Melianas, Z. Wang, S. Agarwal, Y. Li, Y. Tuchman, C. D. James, M. J. Marinella, J. J. Yang, A. Salleo, and A. A. Talin, “Parallel programming of an ionic floating-gate memory array for scalable neuromorphic computing,” Science, vol. 364, no. 6440, pp. 570-574, 2019.

- S. Agarwal, D. Garland, J. Niroula, R. B. Jacobs-Gedrim, A. Hsia, M. S. Van Heukelom, E. J. Fuller, B. Draper, and M. J. Marinella, “Using Floating-Gate Memory to Train Ideal Accuracy Neural Networks,” IEEE Journal on Exploratory Solid-State Computational Devices and Circuits, vol. 5, no. 1, 2019.

- C. H. Bennett, D. Garland, R. B. Jacobs-Gedrim, S. Agarwal, and M. J. Marinella, “Wafer-Scale TaOx Device Variability and Implications for Neuromorphic Computing Applications,” in 2019 IEEE International Reliability Physics Symposium (IRPS), 2019.

- M. J. Marinella, S. Agarwal, A. Hsia, I. Richter, R. B. Jacobs-Gedrim, J. Niroula, S. J. Plimpton, E. Ipek, and C. D. James, “Multiscale co-design analysis of energy, latency, area, and accuracy of a ReRAM analog neural training accelerator,” IEEE Journal on Emerging and Selected Topics in Circuits and Systems, vol. 8, no. 1, pp. 86-101, 2018.

- S. Agarwal, R. B. Jacobs-Gedrim, A. H. Hsia, D. R. Hughart, E. J. Fuller, A. A. Talin, C. D. James, S. J. Plimpton, and M. J. Marinella, “Achieving Ideal Accuracies in Analog Neuromorphic Computing Using Periodic Carry,” in 2017 IEEE Symposium on VLSI Technology, Kyoto, Japan, 2017.

- Y. van de Burgt, E. Lubberman, E. J. Fuller, S. T. Keene, G. C. Faria, S. Agarwal, M. J. Marinella, A. A. Talin, and A. Salleo, “A non-volatile organic electrochemical device as a low-voltage artificial synapse for neuromorphic computing,” Nature Materials, vol. 16, no. 4, pp. 414-418, 2017.

- E. J. Fuller, F. E. Gabaly, F. Léonard, S. Agarwal, S. J. Plimpton, R. B. Jacobs-Gedrim, C. D. James, M. J. Marinella, and A. A. Talin, “Li-Ion Synaptic Transistor for Low Power Analog Computing,” Advanced Materials, vol. 29, no. 4, p. 1604310, 2017.

- S. Agarwal, S. J. Plimpton, D. R. Hughart, A. H. Hsia, I. Richter, J. A. Cox, C. D. James, and M. J. Marinella, “Resistive memory device requirements for a neural algorithm accelerator,” in 2016 International Joint Conference on Neural Networks (IJCNN), pp. 929-938, 2016.

Contact Us

For questions, feature requests, bug reports, or suggestions on the CrossSim software, please submit a new issue through GitHub. For other questions or if you would like to contribute to the source code, please e-mail Ben Feinberg, T. Patrick Xiao, Curtis Brinker, Christopher Bennett, or Sapan Agarwal.